Chapter 3 Process-Based Modeling - Probabilistic and Process Simulation

* Modules 3.1 and 3.2 are adapted from Fabian Nipggen (REWM.4500.500)

3.1 Transfer function rainfall-runoff models

3.1.1 Learning Module 4

3.1.1.1 Summary

In previous modules, we explored how watershed characteristics influence the flow of input water through or over hillslopes to quickly contribute to stormflow or to be stored for later contribution to baseflow. Therefore, the partitioning of flow into baseflow or stormflow can be determined by the time it spends in the watershed. Furthermore, the residence time of water in various pathways may affect weathering and solute transport within watersheds. To improve our understanding of water movement within a watershed, it can be crucial to incorporate water transit time into hydrological models. This consideration allows for a more realistic representation of how water moves through various storage compartments, such as soil, groundwater, and surface water, accounting for the time it takes for water to traverse these pathways.

In this module, we will model the temporal aspects of runoff response to input using a transfer function. First, please read:

TRANSEP - a combined tracer and runoff transfer function hydrograph separation model

Then this chapter will step through key concepts in the paper to facilitate hands-on exploration of the rainfall-runoff portion of the TRANSEP model in the assessment. Then, we will introduce examples of other transfer functions to demonstrate alternative ways of representing time-induced patterns in hydrological modeling, prompting you to consider response patterns in your study systems.

3.1.1.2 Overall Learning Objectives

At the end of this module, students should be able to describe several ways to model and identify transit time within hydrological models. They should have a general understanding of how water transit time may influence the timing and composition of runoff.

3.1.1.3 Terminology

In modeling a flow system, note that consideration of time may vary depending on the questions being asked. Transit time is the average time required for water to travel through the entire flow system, from input (e.g., rainfall on soil surface) to output (e.g., discharge). Residence time is a portion of transit time, describing the amount of time water spends within a specific component of the flow system, like storage (e.g., in soil, groundwater, or a lake).

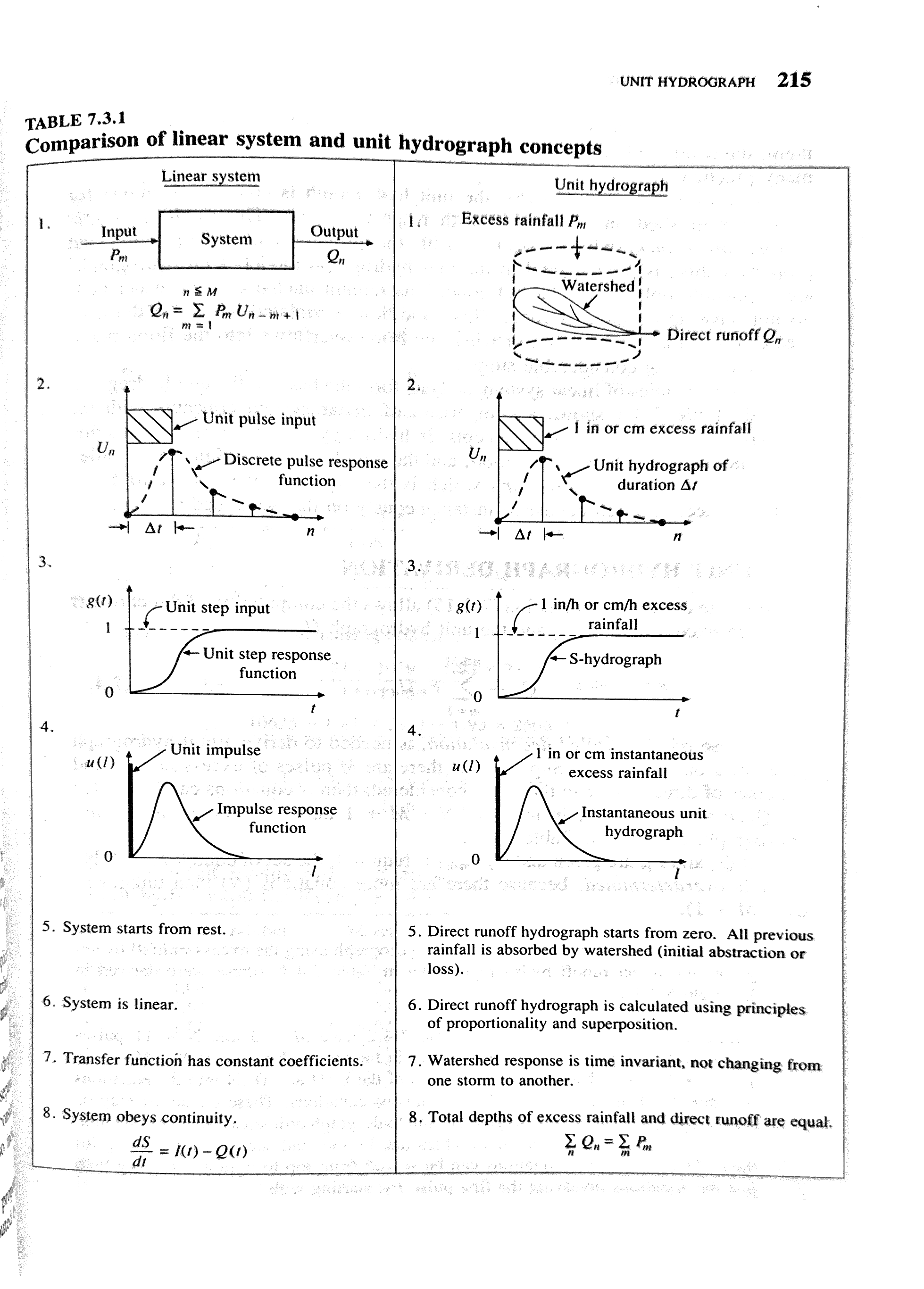

A transfer function (TF) is a mathematical representation of how a system responds to input signals. In a hydrological context, it describes the transformation of inputs (e.g. precipitation) to outputs (e.g. runoff). These models can be valuable tools for understanding the time-varying dynamics of a hydrological system.

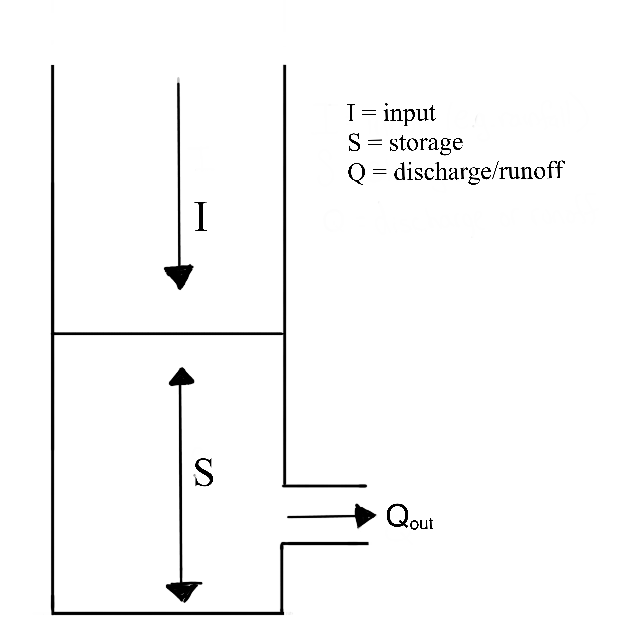

3.1.1.4 The Linear Time-Invariant TF

We’ll begin the discussion in the context of a linear reservoir. Linear reservoirs are simple models designed to simulate the storage and discharge of water in a catchment. These models assume that the catchment can be represented as single storage compartments or as a series of interconnected storage compartments and that the change the amount of water stored in the reservoir (or reservoirs) is directly proportional to the inflows and outflows. In other words, the linear relationship between inflows and outflows means that the rate of water release is proportional to the amount of water stored in the reservoir.

3.1.1.4.1 The Instantaneous Unit Hydrograph:

The Instantaneous Unit Hydrograph (IUH) represents the linear rainfall-runoff model used in the TRANSEP model. It is an approach to hydrograph separation that is useful for analyzing the temporal distribution of runoff in response to a ‘unit’ pulse of rainfall (e.g. uniform one-inch depth over a unit area represented by a unit hydrograph). In other words, it is a hydrograph that results from one unit (e.g. 1 mm) of effective rainfall uniformly distributed over the watershed and occurring in a short duration. Therefore, the following assumptions are made when the IUH is used as a transfer function:

1. the IUH reflects the ensemble of watershed characteristics

2. the shape characteristics of the unit hydrograph are independent of time

3. the output response is linearly proportional to the input

By interpreting the IUH as a transfer function, we can model how the watershed translates rainfall into runoff. In the TRANSEP model, this transfer function is represented as \(g(\tau)\) and thus the rainfall-induced response to runoff.

\[

g(\tau) = \frac{\tau^{\alpha-1}}{\mathrm{B}^{\alpha}\Gamma(\alpha)}exp(-\frac{\tau}{\alpha})

\]

The linear portion of the TRANSEP model describes a convolution of the effective precipitation and a runoff transfer function.

\[

Q(t)= \int_{0}^{t} g(\tau)p_{\text{eff}}(t-\tau)d\tau

\]

Whoa, wait…what? Tau, integrals, and convolution? Don’t worry about the details of the equations. Check out this video to have convolution described using dollars and cookies, then imagine each dollar as a rainfall unit and each cookie as a runoff unit. Review the equations again after the video.

3.1.1.4.2 The Loss Function:

The loss function represents the linear rainfall-runoff model used in the TRANSEP model.

\[

s(t) = b_{1} p(t + 1 - b_{2}^{-1}) s(t - \triangle t)

\]

\[

s(t = 0) = b_{3}

\]

\[

p_{\text{eff}}(t) = p(t) s(t)

\]

where \(p_{\text{eff}}(t)\) is the effective precipitation.

\(s(t)\) is the antecedent precipitation index which is determined by giving more importance to recent precipitation and gradually reducing that importance as we go back in time.

The rate at which this importance decreases is controlled by the parameter \(b_{2}\).

The parameter \(b_{3}\) sets the initial antecedent precipitation index at the beginning of the simulated time series.

In other words, these equations are used to simulate the flow of water in a hydrological system over time. The first equation represents the change in stored water at each time step, taking into account precipitation, loss to runoff, and the system’s past state. The second equation sets the initial condition for the storage at the beginning of the simulation. The third equation calculates the effective precipitation, considering both precipitation and the current storage state.

3.1.1.4.3 How do we code this?

We will use a skeletal version of TRANSEP, focusing only on the rainfall-runoff piece which includes the loss-function and the gamma transfer function.

We will use rainfall and runoff data from TCEF to model annual streamflow at a daily time step. Then we can use this model as a jump-off point to start talking about model calibration and validation in future modules.

3.1.1.4.4 Final thoughts:

If during your modeling experience, you find yourself wading through a bog of complex physics and multiple layers of transfer functions to account for every drop of input into a system, it is time to revisit your objectives. Remember that a model is always ‘wrong’. Like a map, it provides a simplified representation of reality. It may not be entirely accurate, but it serves a valuable purpose. Models help us understand complex systems, make predictions, and gain insights even if they are not an exact replica of the real world. Check out this paper for more:

https://agupubs.onlinelibrary.wiley.com/doi/10.1029/93WR00877

3.1.3 Labwork (20 pts)

In this homework/lab, you will write a simple, lumped rainfall-runoff model. The foundation for this model is the TRANSEP model from Weiler et al., 2003. Since TRANSEP (tracer transfer function hydrograph separation model) contains a tracer module that we don’t need, we will only use the loss function (Jakeman and Hornberger) and the gamma transfer function for the water routing.

The data for the model is from the Tenderfoot Creek Experimental Forest in central Montana.

Load packages with a function that checks and installs packages if needed:

Define input year We could use every year in the time series, but for starters, we’ll use 2006. Use filter() to extract the 2006 water year.

1) QUESTION: What does the function cumsum() do?(1 pt) ANSWER:

Define the initial inputs

This chunk defines the initial inputs for measured precip and measured runoff.

Parameterization

We will use these parameters. You can change them if you want, but I’d suggest leaving them like this at least until you get the model to work. Even tiny changes can have a huge effect on the simulated runoff.

Loss function

This is the module for the Jakeman and Hornberger loss function where we turn our measured input precip into effective precipitation (p_eff). This part contains three steps.

1) preallocate a vector p_eff: Initiate an empty vector for effective precipitation that we will fill in with a loop using Peff(t) = p(t)s(t). Effective precipitation is the portion of precipitation that generates streamflow and event water contribution to the stream. It is separated to produce event water and displace pre-event water into the stream.

2) set the initial value for s: s(t) is an antecedent precipitation index. How much does antecedent precipitation affect effective precipitation?

3) generate p_eff inside of a for-loop

Please note: The Weiler et al. (2003) paper states that one of the loss function parameters (vol_c) can be determined from the measured input. That is actually not the case.

s(t) is the antecedent precipitation index that is calculated by exponentially weighting the precipitation backward in time according to the parameter b2 is a ‘dial’ that places weight on past precipitation events.

2) QUESTION (3 pts): Interpret the two figures. What is the meaning of “frac”? For this answer, think about what the effective precipitation represents. When is frac “high”, when is “frac” low?

ANSWER:

Extra Credit (2 pt): Combine the two plots into one, with a meaningfully scaled secondary y-axis

Runoff transfer function

Routing module -

This short chunk sets up the TF used for the water routing. This part contains only two steps: 1) the calculation of the actual TF. 2) normalization of the TF so that the sum of the TF equals 1.

tau(0) is the mean residence time

3) QUESTION (2 pt): Why is it important to normalize the transfer function?

ANSWER:

4) QUESTION (4 pt): Describe the transfer function. What are the units and what does the shape of the transfer function mean for runoff generation?

ANSWER:

Convolution

This is the heart of the model. Here, we convolute the input with the TF to generate runoff. There is another thing you need to pay attention to: We are only interested in one year (365 days), but since our TF itself is 365 timesteps long, small parts of all inputs except for the first one, will be turned into streamflow AFTER the water year of interest, that is, in the following year. In practice, this means you have two options to handle this.

1) You calculate q_all and then manually cut the matrix/vector to the correct length at the end, or

2) You only populate a vector at each time step and put the generated runoff per iteration in the correct locations within the vector. For this to work, you would need to trim the length of the generated runoff by one during each iteration. This approach is more difficult to code, but saves a lot of memory since you are only calculating/storing one vector of length 365 (or 366 during a leapyear).

We will go with option 1). The code for option 2 is shown at the end for reference.

Convolution summarized (recall dollars and cookies): Each loop iteration results in a row of the matrix representing the convolution at a specific time step. each time step of p_eff is an iteration, and for each timestep, it multiplies the effective precipitation at that timestep by the entire transfer function. Then each row is summed and stored in the vector q_all. q_all_loop is an intermediate step in the convolution process and can help visualize how the convolution evolves over time. As an example, if we have precip at time step 1, we are interested in how this contributes to runoff at time steps 1,2,3 etc, all the way to 365 (or the end of our period). so the first row of the matrix q_all_loop represents the contribution of precipitation at timestep 1 to runoff at each timestep. the second row represents the contribution of precipitation at time step 2 to runoff at each timestep. Then when we sum up the rows, we get q_all, where each element represents the total runoff at a specific time step.

5) QUESTION (5 pts): We set the TF length to 365. What is the physical meaning of this (i.e., how well does this represent a real system and why)? Could the transfer function be shorter or longer than that?

ANSWER:

Plots

Plot the observed and simulated runoff. Include a legend and label the y-axis.

6) QUESTION (3 pt): Evaluate how good or bad the model performed (i.e., visually compare simulated and observed streamflow, e.g., low flows and peak flows).

ANSWER:

7) QUESTION (2 pt): Compare the effective precipitation total with the simulated runoff total and the observed runoff total. What is p_eff and how is it related to q_all? Discuss why there is a (small) mismatch between the sums of p_eff and q_all.

ANSWER:

THIS IS THE CODE FOR CONVOLUTION METHOD 2

This method saves storage requirements since only a vector is generated and not a full matrix that contains all response pulses. The workflow here is to generate a a response vector for the first pulse. This will take up 365 time steps. On the second time step, we generate another response pulse with 364 steps that starts at t=2. We then add that vector to the first one. On the third time step, we generate a response pulse of length 363 that starts at t=3 and then add it to the existing one. And so on and so forth.

3.2 Monte Carlo Simulation

3.2.1 Learning Module 5

3.2.1.1 Background:

Monte Carlo Simulation is a method to estimate the probability of the outcomes of an uncertain event. It is based on a law of probability theory that says if we repeat an experiment many times, the average of the results will get closer to the true probability of those outcomes.

First check out this video:

3.2.1.2 Reading

Then read this: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2924739/ for a understanding of the fundamentals.

3.2.1.3 How does this apply to hydrological modeling?

When modeling watershed hydrological processes, we often attempting to quantify

- watershed inputs

- e.g., precipitation

- e.g., precipitation

- watershed outputs

- e.g., evapotranspiration, sublimation, runoff/discharge

- e.g., evapotranspiration, sublimation, runoff/discharge

- watershed storage

- precipitation that is not immediately converted to runoff or ET rather stored as snow or subsurface water.

- precipitation that is not immediately converted to runoff or ET rather stored as snow or subsurface water.

Imagine we are trying to predict the percentage of precipitation stored in a watershed after a storm event. We have learned that there are may factors that affect this prediction, like antecedent conditions, that may be difficult to measure directly. Monte Carlo Simulation can offer a valuable approach to estimate the probability of obtaining certain measurements when those factors can not be directly observed or measured. We can approximate the likelihood of specific measurement by simulating a range of possible scenarios.

Monte Carlo Simulation is not only useful for estimating probabilities, but for conducting sensitivity analysis. In any model, there are usually several input parameters. Sensitivity analysis helps us understand how changes in these parameters affect the predicted values. To perform a sensitivity analysis using a Monte Carlo Simulation we can:

- Define the realistic ranges for each parameter we want to analyze

- Using Monte Carlo Simulation, randomly sample values from the defined ranges for each parameter

- Analyze output to understand how different input sample values affect the predicted output

3.2.1.4 Example

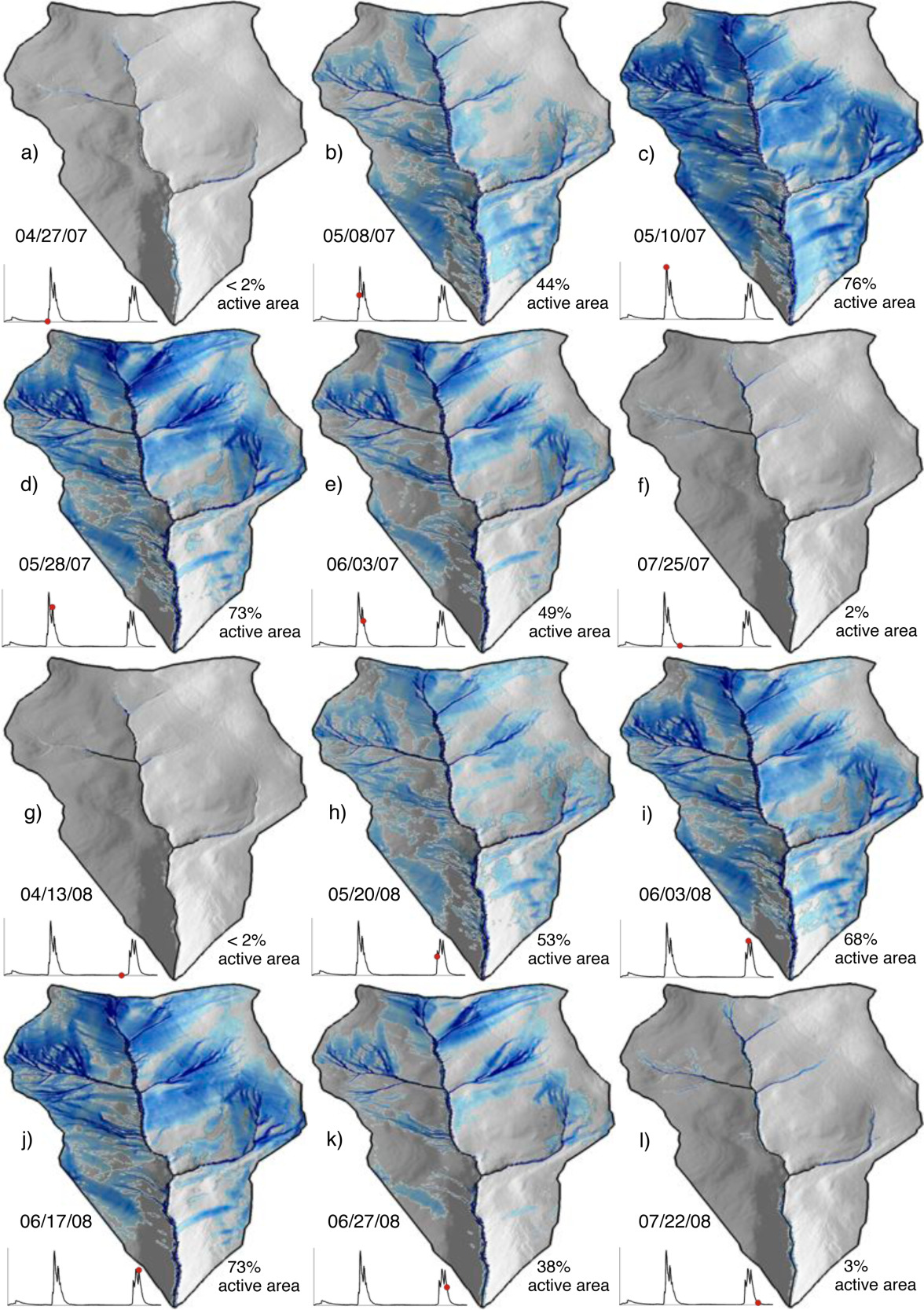

Let’s consider all of this in an example model called WECOH - Watershed ECOHydrology. In this study, researchers (Nippgen et al.) were interested in the change in subsurface water storage through time and space on a daily and seasonal basis.

The authors directly measured runoff/discharge, collected precipitation data at several points within the watershed, used remote sensing to estimate how much water was lost to evapotranspiration, and used digital elevation models to characterize the watershed topography. As we learned in the hydrograph separation module, topographic characteristics can have a significant impact on storage.

Though resources like USDA’s Web Soil Survey can provide a basic understanding underlying geology across a large region, characterizing the heterogeneous nature of soils within a watershed can be logistically unfeasible. To estimate the soil characteristics like storage capacity (how much water the soil can hold) and hydraulic conductivity (how easily water can move through soil) in the study watershed, the authors used available resources to determine the possible range of values for each of their unknown parameters. They then tested thousands of model simulations using randomly selected values with the predetermined range for each of the soil characteristics. They compared the simulated discharge from these simulations to the actual discharge measurements. The simulations that predicted discharge patterns that closely matched reality helped them to estimate the unknown soil properties. Additionally, from the results of these simulations, they could identify which model inputs had the most significant impact on the discharge predictions, and how sensitive the output was to changes in each parameter. In this case, they determined that the model and system were most sensitive to precipitation. This type of sensitivity analysis can help us interpret the relative importance of different parameters and understand the overall sensitivity of the model or system. The study is linked for your reference but a thorough reading is not required.

3.2.1.5 Optional Reading

This methods paper by Knighton et al. is an example of how Monte Carlo Simulation was used to estimate hydraulic conductivity in an urban system with varied land-cover..

3.2.1.6 Generate a simulation with code

For a 12-minute example in RStudio, check this out. If you are still learning the basics of R functionality, it may be helpful to code along with this video, pausing as needed. Note that this instruction is coding in an Rscript (after opening RStudio > File > New File > R Script), rather than an Rmarkdown that we use in this class.

3.2.3 Labwork (20 pnts)

In this lab/homework, you will use the transfer function model from the previous module for some sensitivity analysis using Monte Carlo simulations. The code is mostly the same as last module with some small adjustments to save the parameters from each Monte Carlo run. In this Monte Carlo simulation, we’re testing how different parameter values affect simulated streamflow (discharge). Since we don’t know the true values of certain model parameters (like b1, b2, b3, a, and b that you may recall from the transfer function), we randomly sample them within a reasonable range and run the model many times to see which parameter combinations best match observed streamflow data. Each iteration of the loop represents one possible “guess” at the true parameter values, and we evaluate how good that guess is using metrics like Nash-Sutcliffe Efficiency (NSE), Kling-Gupta Efficiency (KGE), Root Mean Square Error (RMSE), and Mean Absolute Error (MAE). After the completion of the MC runs, we will use the Generalized Likelihood Uncertainty estimation (GLUE) method to evaluate parameter sensitivity. In other words, we sort all the runs to find the best-fitting parameter combinations, which helps us understand the most likely values of these unknown parameters in our hydrological model.

There are three main objectives for this homework:

1) Set up the Monte Carlo analysis

2) Run the MC simulation for ONE of the years in the study period and perform a GLUE sensitivity analysis

3) Compare the different objective functions.

A lot of the code in this homework will be provided.

3.2.3.1 Setup

Import packages, including the “progress” and “tictoc” packages. These will allow us to time our loops and functions

Read data - this is the same PQ data we have worked with in previous modules.

Define variables

3.2.3.2 Parameter initialization

This chunk has two purposes. The first is to set up the number of iterations for the Monte Carlo simulation. The entire model code is essentially wrapped into the main MC for loop. Each iteration of that loop is one full model realization: loss function, TF, convolution, model fit assessment (objective function). For each model run (each MC iteration), we will save the model parameters and respective objective functions in a dataframe. This will be the main source for the GLUE sensitivity analysis at the end. The model parameters are sampled in the main model chunk, this is just the preallocation of the dataframe.

You will both run your own MC simulation, to set up the code, but will also receive a .Rdata file with 100,000 runs to give you more behavioral runs for the sensitivity analysis and uncertainty bounds. As a tip, while setting up the code, I would recommend setting the number of MC iterations to something low, for example 10 or maybe even 1. Once you have confirmed that your code works, crank up the number of iterations. Set it to 1000 to see how many behavioral runs you get. After that, load the file with the provided runs.

3.2.3.3 MC Model run

This is the main model chunk. The tic() and toc() statements measure the execution time for the whole chunk. There is also a progressbar in the chunk that3 will run in the console and inform you about the progress of the MC simulation. The loss function parameters are set in the loss function, the TF parameters in the loss function code. For each loop iteration, we will store the parameter values and the simulated discharge in the “param” dataframe. So, if we ran the MC simulation 100 times, we would end up with 100 parameter combinations and simulated discharges.

Q1 (2 pt) How are the loss function and transfer function parameters being sampled? That is, what is the underlying distribution and why did we choose it? (2-3 sentences)

ANSWER:

Extra point: What does the while loop do in the transfer function calculation? And why is it in there? (1-2 sentences)

ANSWER:

Save or load data

After setting up the MC simulation, we will actually use a pre-created dataset. Running the MC simulation tens of thousands of times will take multiple hours. For that reason, we have done this for you and saved it as an importable data set.

Best run

Now that we have the dataframe with all parameter combinations and all efficiencies, we can plot the best simulation and compare it to the observed discharge

3.2.4 Sensitivity analysis

We will use the GLUE methodology to assess parameter sensitivity. We will use GLUE for two purposes: 1) Assessing parameter sensitivity – Understanding how different parameter values influence model performance 2) Creating an envelope of model simulations – Identifying a range of possible model outputs that fit the observed data

To start, we need to format the data so we can create dotty plots and density plots using Nash-Sutcliffe Efficiency (NSE) as our performance metric.

When plotting, each parameter should be displayed in its own panel. You can achieve this using facet_wrap() in ggplot2, and be sure to set the axis scaling to “free” so each parameter is properly visualized

Q2 (4 pts) Describe the sensitivity analysis with the three different plots. Are the parameters sensitive? Which ones are, which ones are not? Does this affect your “trust” in the model? (5-8 sentences)

ANSWER:

Q3 (4 pts) What are the differences between the dotty plots and the density plots? What are the differences between the two density plots? (2-3 sentences) ANSWER:

Uncertainty bounds In this section, we will generate uncertainty bounds for our simulation results. Instead of using all model runs, we will only include the behavioral runs—the ones that meet our performance criteria. This ensures that our uncertainty range reflects only the most realistic simulations.

Q4 (3 pts) Describe what the envelope actually is. Could we say we are dealing with confidence or prediction intervals? (2-3 sentences)

Hint: Think about what the envelope is capturing. Does it reflect uncertainty in the model structure and parameters or does it describe the variability in future observations?

ANSWER:

Q5 (3 pts) If you inspect the individual model runs (in the Qruns df), you will notice that they all perform somewhat poorly when it comes to the initial baseflow. Why is that and what could you do to change this? (Note: you don’t have to actually do this, just describe how you might approach that issue (2-3 sentences)

Hint: Consider factors such as parameter initialization, storage effects, or missing processes in the model structure. What tuning or modifications could address this?

ANSWER:

Q6 (4 pts) Run the provided code to generate a plot comparing the best model run for each objective function. Then, analyze the differences in model performance by answering the following: Which objective function provides the best match to observed runoff overall? How do the different functions perform in capturing peak flow events? Which function best represents baseflow conditions? Which struggles the most? If you were to prioritize accuracy in low-flow conditions, which objective function might you choose and why?** ANSWER:

The coding steps are: 1) get the simulated q vector with the best run for each objective function, 2) put them in a dataframe/tibble, 3) create the long form, 4) plot the long form dataframe/tibble. These steps are just ONE possible outline how the coding steps can be broken up.

Response surface