Chapter 3 Transfer function rainfall-runoff models

3.1 Summary

In previous modules, we explored how watershed characteristics influence the flow of input water through or over hillslopes to quickly contribute to stormflow or to be stored for later contribution to baseflow. Therefore, the partitioning of flow into baseflow or stormflow can be determined by the time it spends in the watershed. Furthermore, the residence time of water in various pathways may affect weathering and solute transport within watersheds. To improve our understanding of water movement within a watershed, it can be crucial to incorporate water transit time into hydrological models. This consideration allows for a more realistic representation of how water moves through various storage compartments, such as soil, groundwater, and surface water, accounting for the time it takes for water to traverse these pathways.

In this module, we will model the temporal aspects of runoff response to input using a transfer function. First, please read:

TRANSEP - a combined tracer and runoff transfer function hydrograph separation model

Then this chapter will step through key concepts in the paper to facilitate hands-on exploration of the rainfall-runoff portion of the TRANSEP model in the assessment. Then, we will introduce examples of other transfer functions to demonstrate alternative ways of representing time-induced patterns in hydrological modeling, prompting you to consider response patterns in your study systems.

3.2 Overall Learning Objectives

At the end of this module, students should be able to describe several ways to model and identify transit time within hydrological models. They should have a general understanding of how water transit time may influence the timing and composition of runoff.

3.3 Terminology

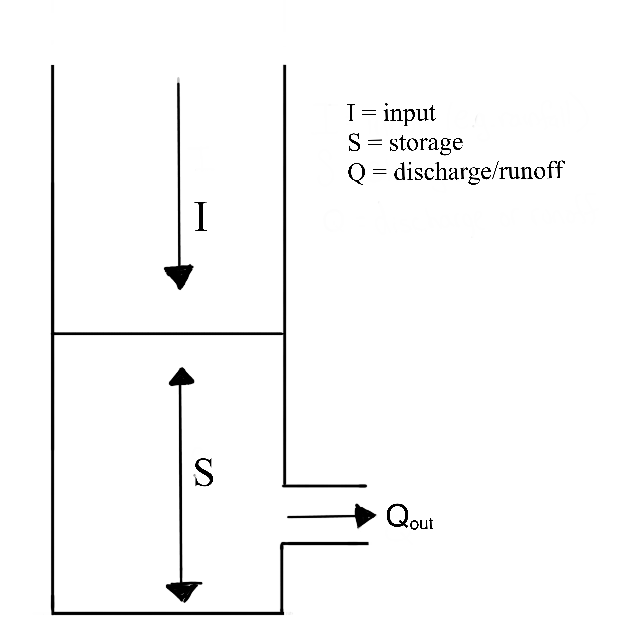

In modeling a flow system, note that consideration of time may vary depending on the questions being asked. Transit time is the average time required for water to travel through the entire flow system, from input (e.g., rainfall on soil surface) to output (e.g., discharge). Residence time is a portion of transit time, describing the amount of time water spends within a specific component of the flow system, like storage (e.g., in soil, groundwater, or a lake).

A transfer function (TF) is a mathematical representation of how a system responds to input signals. In a hydrological context, it describes the transformation of inputs (e.g. precipitation) to outputs (e.g. runoff). These models can be valuable tools for understanding the time-varying dynamics of a hydrological system.

3.4 The Linear Time-Invariant TF

We’ll begin the discussion in the context of a linear reservoir. Linear reservoirs are simple models designed to simulate the storage and discharge of water in a catchment. These models assume that the catchment can be represented as single storage compartments or as a series of interconnected storage compartments and that the change the amount of water stored in the reservoir (or reservoirs) is directly proportional to the inflows and outflows. In other words, the linear relationship between inflows and outflows means that the rate of water release is proportional to the amount of water stored in the reservoir.

3.4.0.1 The Instantaneous Unit Hydrograph:

The Instantaneous Unit Hydrograph (IUH) represents the linear rainfall-runoff model used in the TRANSEP model. It is an approach to hydrograph separation that is useful for analyzing the temporal distribution of runoff in response to a ‘unit’ pulse of rainfall (e.g. uniform one-inch depth over a unit area represented by a unit hydrograph). In other words, it is a hydrograph that results from one unit (e.g. 1 mm) of effective rainfall uniformly distributed over the watershed and occurring in a short duration. Therefore, the following assumptions are made when the IUH is used as a transfer function:

1. the IUH reflects the ensemble of watershed characteristics

2. the shape characteristics of the unit hydrograph are independent of time

3. the output response is linearly proportional to the input

Peak discharge of the unit hydrograph, \(u_p\);

Base time \(t_b\)is the total duration of the unit hydrograph;

Increase time or time to peak \(t_p\) is the time between the start point of the hydrograph and the peak;

Concentration time \(t_c\) is the time between the end of rainfall and the end of the hydrograph;

Lag time \(t_lag\) is the time between half rainfall depth and the peak of the hydrograph.

IUH as a transfer function allows the calculation of the direct runoff hydrograph for any given rainfall input. IUH is particularly valuable for understanding how the direct runoff is distributed over time in response to a rainfall event. It helps quantify the time distribution of runoff in relation to the rainfall input.

In the TRANSEP model, this transfer function is represented as \(g(\tau)\) and thus the rainfall-induced response to runoff.

\[

g(\tau) = \frac{\tau^{\alpha-1}}{\mathrm{B}^{\alpha}\Gamma(\alpha)}exp(-\frac{\tau}{\alpha})

\]

The linear portion of the TRANSEP model describes a convolution of the effective precipitation and a runoff transfer function.

\[

Q(t)= \int_{0}^{t} g(\tau)p_{\text{eff}}(t-\tau)d\tau

\]

Whoa, wait…what? Tau, integrals, and convolution? Don’t worry about the details of the equations. Check out this video to have convolution described using dollars and cookies, then imagine each dollar as a rainfall unit and each cookie as a runoff unit. Review the equations again after the video.

3.4.0.2 The Loss Function:

The loss function represents the linear rainfall-runoff model used in the TRANSEP model.

\[

s(t) = b_{1} p(t + 1 - b_{2}^{-1}) s(t - \triangle t)

\]

\[

s(t = 0) = b_{3}

\]

\[

p_{\text{eff}}(t) = p(t) s(t)

\]

where \(p_{\text{eff}}(t)\) is the effective precipitation.

\(s(t)\) is the antecedent precipitation index which is determined by giving more importance to recent precipitation and gradually reducing that importance as we go back in time.

The rate at which this importance decreases is controlled by the parameter \(b_{2}\).

The parameter \(b_{3}\) sets the initial antecedent precipitation index at the beginning of the simulated time series.

In other words, these equations are used to simulate the flow of water in a hydrological system over time. The first equation represents the change in stored water at each time step, taking into account precipitation, loss to runoff, and the system’s past state. The second equation sets the initial condition for the storage at the beginning of the simulation. The third equation calculates the effective precipitation, considering both precipitation and the current storage state.

3.4.0.3 How do we code this?

We will use a skeletal version of TRANSEP, focusing only on the rainfall-runoff piece which includes the loss-function and the gamma transfer function.

We will use rainfall and runoff data from TCEF to model annual streamflow at a daily time step. Then we can use this model as a jump-off point to start talking about model calibration and validation in future modules.

3.4.0.4 Final thoughts:

If during your modeling experience, you find yourself wading through a bog of complex physics and multiple layers of transfer functions to account for every drop of input into a system, it is time to revisit your objectives. Remember that a model is always ‘wrong’. Like a map, it provides a simplified representation of reality. It may not be entirely accurate, but it serves a valuable purpose. Models help us understand complex systems, make predictions, and gain insights even if they are not an exact replica of the real world. Check out this paper for more:

https://agupubs.onlinelibrary.wiley.com/doi/10.1029/93WR00877

3.5 Codework - Transfer function rainfall-runoff model

3.5.1 Download the repo for this lab HERE

In this homework/lab, you will write a simple, lumped rainfall-runoff model. The foundation for this model is the TRANSEP model from Weiler et al., 2003. Since TRANSEP (tracer transfer function hydrograph separation model) contains a tracer module that we don’t need, we will only use the loss function (Jakeman and Hornberger) and the gamma transfer function for the water routing.

The data for the model is from the Tenderfoot Creek Experimental Forest in central Montana.

Load packages with a function that checks and installs packages if needed:

#

# # Write your package testing function

# pkgTest <- function(x)

# {

# if (x %in% rownames(installed.packages()) == FALSE) {

# install.packages(x, dependencies= TRUE)

# }

# library(x, character.only = TRUE)

# }

#

# # Make a vector of the packages you need

# neededPackages <- c('tidyverse', 'lubridate', 'tictoc', 'patchwork') #tools for plot titles

#

# # For every package in the vector, apply your pkgTest function

# for (package in neededPackages){pkgTest(package)}

#

# # tictoc times the execution of different modeling methods

# # patchwork is used for side-by-side plots# # Let's assume that you know you put your data file in the working directory, but cannot recall its name. Let's do some working directory exploration with script:

#

# # Check your working directory:

# print(getwd())

#

# # Check the datafile name or path by listing files in the working directory.

# filepaths <-list.files()

#

# # Here is an option to list only .csv files in your working directory:

# csv_files <- filepaths[grepl(".csv$", filepaths, ignore.case = TRUE)]

# print(csv_files)

# ```

#

# #### Read in the data, convert the column that has the date to a (lubridate) date, and add a column that contains the water year

# # Identify the path to the desired data.

# filepath <- "P_Q_1996_2011.csv"

# indata <- read.csv(filepath)

#

# indata <- indata %>%

# mutate(Date = mdy(Date)) %>% # convert "Date" to a date object with mdy()

# mutate(wtr_yr = if_else(month(Date) > 9, year(Date) + 1, year(Date)))Define input year We could use every year in the time series, but for starters, we’ll use 2006. Use filter() to extract the 2006 water year.

# PQ <- indata %>%

# filter(wtr_yr == 2006) # extract the 2006 water year with filter()

#

# # plot discharge for 2006

# ggplot(PQ, aes(x = Date, y = Discharge_mm)) +

# geom_line()

#

# # make flowtime correction - flowtime is a time-weighted cumulative flow, which aids in understanding the temporal distribution of flow, giving more weight to periods of higher discharge. flow time correction is relevant in hydrology when analyzing Q or time series data, so we can compare hydrological events on a standardized time scale. It can help to identify patterns, assess the duration of high or low flows, and compare behavior of watersheds over time.

#

# PQ <- PQ %>%

# mutate(flowtime = cumsum(Discharge_mm)/mean(Discharge_mm)) %>%

# mutate(counter = 1:nrow(PQ))

#

# ggplot() +

# geom_line(data=PQ, aes(x = flowtime, y = Discharge_mm)) +

# geom_line(data=PQ, aes(x = counter, y = Discharge_mm), color="red")**1) QUESTION: What does the function cumsum() do?(1 pt) ANSWER:

Define the initial inputs

This chunk defines the initial inputs for measured precip and measured runoff

# tau <- 1:nrow(PQ) # simple timestep counter the same length as PQ

# Pobs <- PQ$RainMelt_mm # observed precipitation from PQ df

# Qobs <- PQ$Discharge_mm # observed streamflow from PQ dfParameterization

We will use these parameters. You can change them if you want, but I’d suggest leaving them like this at least until you get the model to work. Even tiny changes can have a huge effect on the simulated runoff.

# # Loss function parameters

# b1 <- 0.0018 # volume control parameter (b1 in eq 4a)

# b2 <- 50 # backwards weighting parameter (b2 in eq 4a)

# # determines how much weight or importance is given to past precipitation events when calculating an antecedent precipitation index. "Exponential weighting backward in time" means that the influence of past precipitation events diminishes as you move further back in time, and this diminishing effect follows an exponential pattern.

#

# b3 <- 0.135 # initial s(t) value for s(t=0) (b3 in eq 4b) - Initial antecedent precipitation index value.

#

# # Transfer function parameters

# a <- 1.84 # TF shape parameter

# b <- 3.29 # TF scale parameterLoss function

This is the module for the Jakeman and Hornberger loss function where we turn our measured input precip into effective precipitation (p_eff). This part contains three steps.

1) preallocate a vector p_eff: Initiate an empty vector for effective precipitation that we will fill in with a loop using Peff(t) = p(t)s(t). Effective precipitation is the portion of precipitation that generates streamflow and event water contribution to the stream. It is separated to produce event water and displace pre-event water into the stream.

2) set the initial value for s: s(t) is an antecedent precipitation index. How much does antecedent precipitation affect effective precipitation?

3) generate p_eff inside of a for-loop

Please note: The Weiler et al. (2003) paper states that one of the loss function parameters (vol_c) can be determined from the measured input. That is actually not the case.

s(t) is the antecedent precipitation index that is calculated by exponentially weighting the precipitation backward in time according to the parameter b2 is a ‘dial’ that places weight on past precipitation events.

# # preallocate the p_eff vector

# p_eff <- vector(mode = "double", length(tau))

#

# s <- b3 # at this point, s is equal to b3, the start value of s

#

# # loop with loss function

# for (i in 1:length(p_eff)) {

# s <- b1 * Pobs[i] + (1 - 1/b2) * s # this is eq 4a from Weiler et al. (2003)

# p_eff[i] <- Pobs[i] * s # this is eq 4c from Weiler et al. (2003)

# }

#

#

# # #### alternative way to calculate p_eff by populating an s vector

# # preallocate s_alt vector

# # s_alt <- vector(mode = "double", length(tau))

# # s_alt[1] <- b3

# # # preallocate p_eff vector

# # p_eff_new <- vector(mode = "double", length(tau))

# # # start loop

# # for (i in 2:(length(p_eff))) {

# # s_alt[i] <- b1 * Pobs[i] + (1 - 1/b2) * s_alt[i-1]

# # p_eff_new[i] <- Pobs[i] * s_alt[i]

# # }

# ####

# # set a starting value for s

#

#

# ## plot observed and effective precipitation against the date

# # wide to long with pivot_longer()

# precip <- tibble(date = PQ$Date, obs = Pobs, sim = p_eff) %>%

# pivot_longer(names_to = "key", values_to = "value", -date)

#

#

# # a good way to plot P time series is with a step function. ggplot2 has geom_step() for this.

# ggplot(precip, aes(x = date, y = value, color = key)) +

# geom_step() +

# labs(color = "P")

#

#

# ## plot the ratio of p_eff/Pobs (call that ratio "frac")

# precip_new <- tibble(date = PQ$Date, obs = Pobs, sim = p_eff, frac = p_eff/Pobs)

#

# ggplot(data = precip_new, aes(x = date, y = frac)) +

# geom_line()**2) QUESTION (3 pts): Interpret the two figures. What is the meaning of “frac”? For this answer, think about what the effective precipitation represents. When is frac “high”, when is “frac” low?

ANSWER:

Extra Credit (2 pt): Combine the two plots into one, with a meaningfully scaled secondary y-axis**

Runoff transfer function

Routing module -

This short chunk sets up the TF used for the water routing. This part contains only two steps: 1) the calculation of the actual TF. 2) normalization of the TF so that the sum of the TF equals 1.

tau(0) is the mean residence time

# #gTF <- tau

#

# # this is the "raw" transfer function. This is eq 13 from Weiler 2003. Use the time step for tau. The Gamma function in R is gamma(). NOTE THAT THIS EQ IN WEILER ET AL IS WRONG! It's exp(-tau / b) and not divided by alpha.

# g <- (tau^(a - 1)) / ((b^a * gamma(a))) * exp(-tau / b)

#

# # normalize the TF, g, by the total sum of g.

# gTF <- g / sum(g)

#

# # plot the TF as a line against tau. You need to put the two vectors tau and gTF into a new df/tibble for this.

# tf_plot <- tibble(tau, gTF)

# ggplot(tf_plot, aes(tau, gTF)) +

# geom_line()3) QUESTION (2 pt): Why is it important to normalize the transfer function?

ANSWER:

4) QUESTION (4 pt): Describe the transfer function. What are the units and what does the shape of the transfer function mean for runoff generation?

ANSWER:

Convolution

This is the heart of the model. Here, we convolute the input with the TF to generate runoff. There is another thing you need to pay attention to: We are only interested in one year (365 days), but since our TF itself is 365 timesteps long, small parts of all inputs except for the first one, will be turned into streamflow AFTER the water year of interest, that is, in the following year. In practice, this means you have two options to handle this.

1) You calculate q_all and then manually cut the matrix/vector to the correct length at the end, or

2) You only populate a vector at each time step and put the generated runoff per iteration in the correct locations within the vector. For this to work, you would need to trim the length of the generated runoff by one during each iteration. This approach is more difficult to code, but saves a lot of memory since you are only calculating/storing one vector of length 365 (or 366 during a leapyear).

We will go with option 1). The code for option 2 is shown at the end for reference.

Convolution summarized (recall dollars and cookies): Each loop iteration results in a row of the matrix representing the convolution at a specific time step. each time step of p_eff is an iteration, and for each timestep, it multiplies the effective precipitation at that timestep by the entire transfer function. Then each row is summed and stored in the vector q_all. q_all_loop is an intermediate step in the convolution process and can help visualize how the convolution evolves over time. As an example, if we have precip at time step 1, we are interested in how this contributes to runoff at time steps 1,2,3 etc, all the way to 365 (or the end of our period). so the first row of the matrix q_all_loop represents the contribution of precipitation at timestep 1 to runoff at each timestep. the second row represents the contribution of precipitation at time step 2 to runoff at each timestep. Then when we sum up the rows, we get q_all, where each element represents the total runoff at a specific time step.

# tic()

# # preallocate qsim matrix with the correct dimensions. Remember that p_eff and gTF are the same length.

# q_all_loop <- matrix(0, length(p_eff) * 2, length(p_eff)) # set number of rows and columns

#

# # convolution for-loop

# for (i in 1:length(p_eff)) { # loop through length of precipitation timeseries

# q_all_loop[(i):(length(p_eff) + i - 1), i] <- p_eff[i] * gTF # populate the q_all matrix (this is the same code as the UH convolution problem with one tiny change because you need to reference gTF and not UH)

# }

#

#

# # add up the rows of the matrix to generate the final runoff and replace matrix with final Q

# q_all <- apply(q_all_loop, 1, sum, na.rm = TRUE)

#

# # cut the vector to the appropriate length of one year (otherwise it won't fit into the original df with the observed data)

# q_all <- q_all[1:length(p_eff)]

#

# # Write the final runoff vector into the PQ df

# PQ$Qsim <- q_all

# toc()5) QUESTION (5 pts): We set the TF length to 365. What is the physical meaning of this (i.e., how well does this represent a real system and why)? Could the transfer function be shorter or longer than that?

ANSWER:

# ## THIS PART SAVES ALL HOURLY Q RESPONSES IN A NEW DF AND PLOTS THEM

# Qall <- as_tibble(q_all_loop, .name_repair = NULL)

# Qall <- Qall[ -as.numeric(which(apply(Qall, 2, var) == 0))]

# toc()

#

# # Qall[Qall == 0] <- NA

#

# Qall <- Qall %>%

# mutate(Time_hrs = 1:nrow(Qall)) %>% # add the time vector

# gather(key, value, -Time_hrs)

#

# Qall$key <- as.factor(Qall$key)

#

#

# ggplot(Qall, aes(x = Time_hrs, y = value, fill = key)) +

# geom_line(alpha = 0.2) +

# theme(legend.position = "none") +

# lims(x = c(1, 365)) +

# labs(x = "DoY", y = "Q (mm/day")Plots

Plot the observed and simulated runoff. Include a legend and label the y-axis.

# # make long form

# PQ_long <- PQ %>%

# select(-wtr_yr, -RainMelt_mm) %>%

# pivot_longer(names_to = "key", values_to = "value", -Date)

#

# # plot hydrographs (as lines) and label the y-axis

# ggplot(data = PQ_long, aes(x = Date, y = value, color = key)) +

# geom_line() +

# labs(x = {}, y = "Q (mm/day)", color = {}) +

# lims(x = as.Date(c("2005-10-01", "2006-09-30")))6) QUESTION (3 pt): Evaluate how good or bad the model performed (i.e., visually compare simulated and observed streamflow, e.g., low flows and peak flows).

ANSWER:

7) QUESTION (2 pt): Compare the effective precipitation total with the simulated runoff total and the observed runoff total. What is p_eff and how is it related to q_all? Discuss why there is a (small) mismatch between the sums of p_eff and q_all.

ANSWER:

# sum(Pobs) # observed P

#

# sum(p_eff) # effective (modeled) P

#

# sum(q_all) # modeled Q

#

# sum(Qobs) # observed QTHIS IS THE CODE FOR CONVOLUTION METHOD 2

This method saves storage requirements since only a vector is generated and not a full matrix that contains all response pulses. The workflow here is to generate a a response vector for the first pulse. This will take up 365 time steps. On the second time step, we generate another response pulse with 364 steps that starts at t=2. We then add that vector to the first one. On the third time step, we generate a response pulse of length 363 that starts at t=3 and then add it to the existing one. And so on and so forth.

# # METHOD 2, Option 1

# tic()

# # preallocate qsim vector

# q_all <- vector(mode = "double", length(p_eff))

# # start convolution

# for (i in 1:length(p_eff)) {

# A <- p_eff[i] * gTF # vector with current Q pulse

# B <- vector(mode = "double", length(p_eff)) # reset/preallocate vector with 0s

# B[i:length(p_eff)] <- A[1:(length(p_eff) - i + 1)] # iteration one uses the full A, iteration 2 full lengthmodel minus one, etc

# q_all <- q_all + B # add new convoluted vector to total runoff

# }

# toc()

#

#

# # Method 2, Option 2

# tic()

# # preallocate qsim vector

# q_all_2 <- vector(mode = "double", length(p_eff))

# B <- vector(mode = "double", length(p_eff)) # preallocate vector with 0s

#

# # start convolution

# for (i in 1:length(p_eff)) {

# A <- p_eff[i] * gTF # vector with current Q pulse

# B[i:length(p_eff)] <- A[1:(length(p_eff) - i + 1)] # iteration one uses the full A, iteration 2 full lengthmodel minus one, etc

# q_all_2[i:length(p_eff)] <- q_all_2[i:length(p_eff)] + B[i:length(p_eff)] # add new convoluted vector to total runoff at correct locations

# }

# toc()

#

# # plot to show the two Q timeseries are the same

# # Create two ggplot objects

# plot_q1 <- ggplot(data=test_q_all, aes(x=time, y=q1)) +

# geom_line() +

# labs(title = "Q1 Plot")

#

# plot_q2 <- ggplot(data=test_q_all, aes(x=time, y=q2)) +

# geom_line(color="blue") +

# labs(title = "Q2 Plot")

#

# # Combine plots side by side

# combined_plots <- plot_q1 + plot_q2 + plot_layout(ncol = 2)

#

# # Display combined plots

# combined_plots